Artificial intelligence has moved from experimental to operational across security programs. The technology now sits inside SIEM platforms, EDR tools, identity systems, and SOAR playbooks.

If you're working in a SOC or building out a security program, you've likely already encountered AI-powered features in your tooling. But understanding how these systems actually work matters if you're going to use them effectively.

This article breaks down what AI does in security contexts, the problems it solves, the risks it introduces, and where the technology is heading. By the end, you'll have a clear picture of where AI fits in your security stack and what skills you'll need to work with it.

Let's see how AI benefits cyber security, and what risks it poses.

The Role of AI in Cyber Security

When we talk about AI in security, we're referring to machine learning models that learn what "normal" looks like across your environment and flag deviations worth investigating. Rather than relying on static rules, these systems adapt based on the data they see.

You'll find AI technology embedded across the security stack now:

- Network monitoring tools use it to analyze traffic flows

- EDR platforms use it to classify process behavior

- Email gateways use it to catch phishing attempts that slip past rule-based filters

- Identity systems use it to build behavioral baselines and flag anomalies like impossible travel logins

Your SIEM might use ML to cluster related events and surface high-priority incidents. Your EDR might embed models that recognize ransomware behavior even without a known signature. Your SOAR platform might use AI-generated risk scores to decide which playbooks to trigger.
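
To make the "impossible travel" anomaly concrete, here is a minimal sketch of the underlying check. It is a fixed-threshold rule, not a learned model: the `Login` type, the 900 km/h speed limit, and the 50 km same-instant tolerance are all assumptions chosen for illustration. A production identity system would typically learn per-user baselines instead of hard-coding them.

```python
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumed threshold, roughly airliner cruise speed


@dataclass
class Login:
    user: str
    when: datetime
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(prev, curr, max_kmh=MAX_PLAUSIBLE_KMH):
    """True when the implied speed between two logins exceeds max_kmh."""
    hours = (curr.when - prev.when).total_seconds() / 3600
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if hours <= 0:
        # Simultaneous or out-of-order logins: flag only if locations differ materially.
        return distance > 50
    return distance / hours > max_kmh


# Hypothetical events: London at 09:00, Sydney two hours later.
london = Login("alice", datetime(2025, 1, 1, 9, 0), 51.5074, -0.1278)
sydney = Login("alice", datetime(2025, 1, 1, 11, 0), -33.8688, 151.2093)
print(is_impossible_travel(london, sydney))
```

In practice the threshold would need tuning for VPN exits and imprecise IP geolocation, both of which produce apparent jumps that are not real travel.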

One point worth emphasizing: AI augments your existing security architecture. It doesn't replace network segmentation, strong identity controls, patching discipline, or backup strategies. Those fundamentals still matter.

If you're deploying AI capabilities, governance frameworks like NIST's AI Risk Management Framework help you manage risks around data quality, model drift, and transparency. These aren't optional considerations - they're what separate effective AI deployments from expensive mistakes.

How AI Benefits Cyber Security

The value of AI in security comes down to solving problems that traditional approaches handle poorly:

- Scale - Rule-based systems can't keep up with modern data volumes

- Fatigue - Human analysts burn out on repetitive triage

- Coverage - Signature-based detection misses novel threats

AI addresses each of these gaps by analyzing more data, faster, and more consistently than human teams or static rules can.

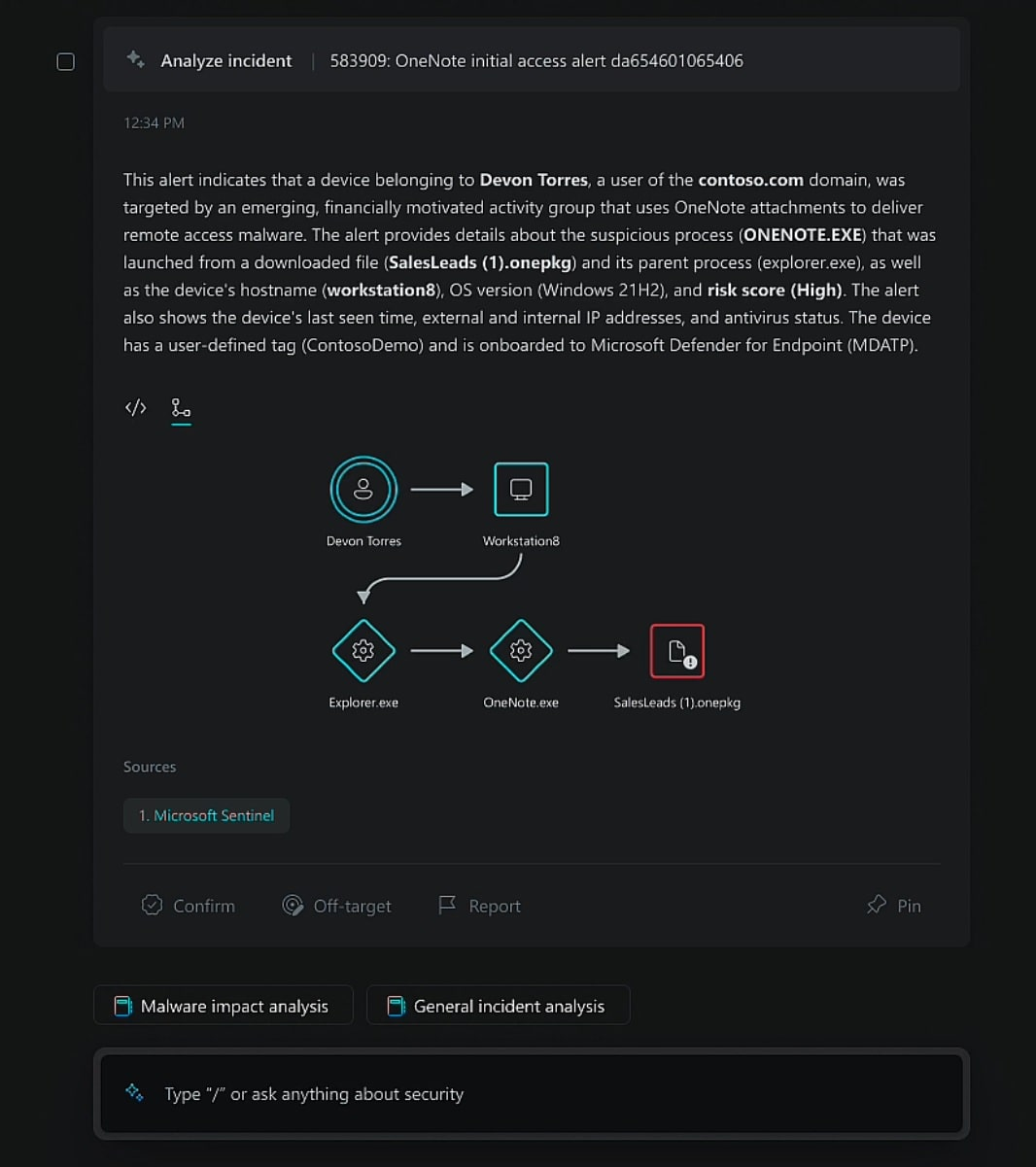

Source: Introducing Microsoft Security Copilot

Enhanced Threat Detection

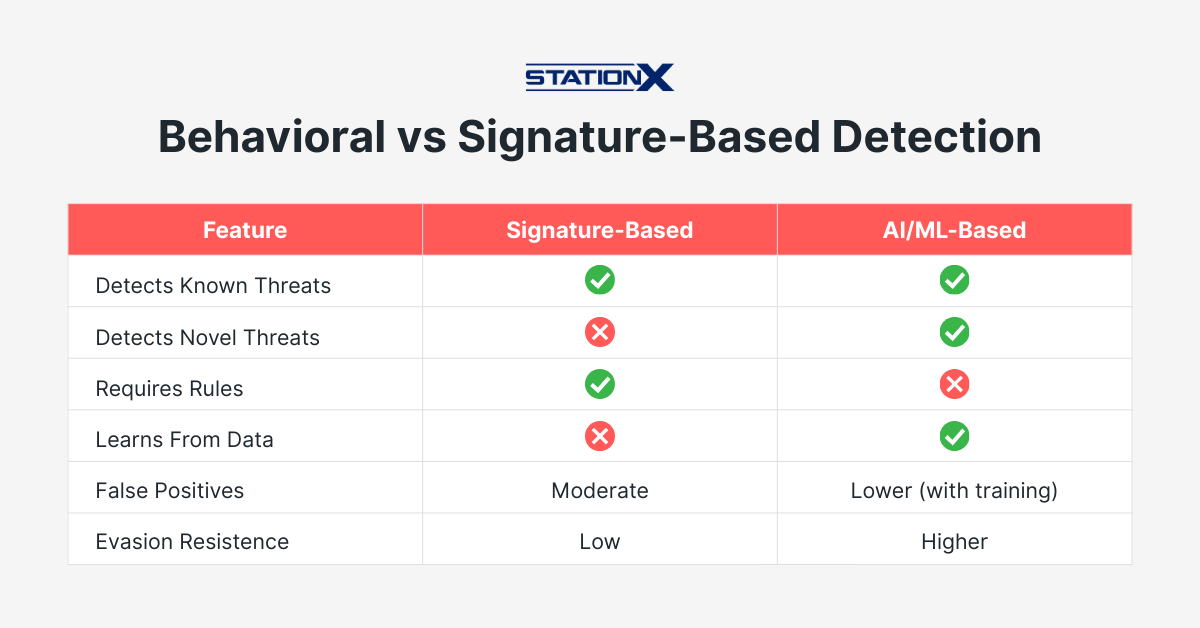

Traditional detection relies on signatures - known indicators that match documented threats. If an attack doesn't match a signature, it slips through, making emerging threats difficult to defend against. Attackers know this and deliberately modify their tools to evade static detection.

AI-based detection works differently.

Machine learning models trained on large datasets learn to recognize suspicious patterns regardless of whether they've seen that specific variant before. Your EDR can flag a process exhibiting ransomware-like activity - rapid file enumeration, encryption operations, deletion of shadow copies - even if the malware binary is brand new.

User and Entity Behavior Analytics takes a similar approach to identity security. UEBA engines build baselines for how users and devices normally behave, then flag anomalies like unusual file access or atypical administrative actions. This is particularly valuable for compromised accounts and insider threats, where the attacker is using legitimate credentials.
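
The baseline-then-flag idea behind UEBA can be sketched with a simple z-score. This is a deliberately reduced illustration, not how any particular UEBA product works: the single feature (daily file reads), the sample history, and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def anomaly_score(history, observed):
    """How many standard deviations `observed` sits from the historical mean."""
    if len(history) < 2:
        return 0.0  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


# Hypothetical per-day file read counts for one user.
daily_file_reads = [40, 38, 45, 42, 39, 41, 44]

print(anomaly_score(daily_file_reads, 43) > 3)   # an ordinary day
print(anomaly_score(daily_file_reads, 400) > 3)  # a bulk-access day
```

Real engines combine many such features per user and device, and usually account for seasonality such as weekday versus weekend activity.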

The scale advantage matters here. Mastercard processes roughly 160 billion transactions annually, scoring each one in about 50 milliseconds to detect fraud patterns. The same mechanics (pattern recognition, risk scoring, anomaly detection) power enterprise security tools.

No human security team could review that volume in real time, but the underlying approach translates directly to threat detection in your environment.

AI doesn't eliminate the need for signatures or rules. It adds a layer that catches what those approaches miss - the behavioral anomalies that indicate something is wrong even when no specific indicator matches.

Automated Incident Response

When a security event fires, response time directly impacts damage. The faster you contain a compromised endpoint or block a malicious IP, the less opportunity an attacker has to move laterally or deploy ransomware.

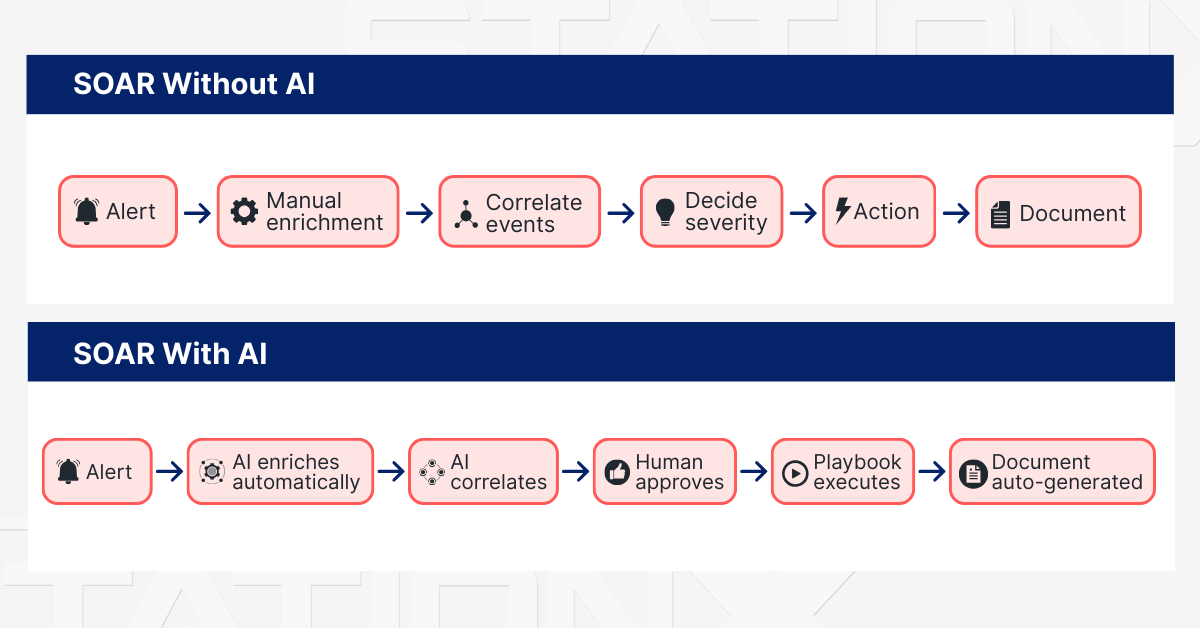

Traditional incident response requires analysts to manually review alerts, gather context, make containment decisions, and execute response actions. Each step takes time. At 3 AM on a holiday weekend, that alert might sit for hours before anyone sees it.

AI-assisted automation changes the equation.

SOAR platforms now use AI to enrich alerts with contextual data, cluster related events into unified incidents, assess severity, and either recommend or execute response playbooks automatically.
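
The enrich, cluster, assess, and recommend-or-execute flow can be sketched as follows. Everything named here is hypothetical: the `ASSET_CRITICALITY` inventory, the action strings, and the 0.9 and 0.5 thresholds are assumptions, not any SOAR vendor's API.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical asset inventory used for enrichment.
ASSET_CRITICALITY = {"web-01": "high", "laptop-17": "low"}
AUTO_EXECUTE_THRESHOLD = 0.9  # assumed cut-off for unattended containment


@dataclass
class Alert:
    host: str
    rule: str
    model_score: float  # 0.0-1.0 risk score from a detection model


def cluster_by_host(alerts):
    """Group related alerts into one incident per host."""
    incidents = defaultdict(list)
    for alert in alerts:
        incidents[alert.host].append(alert)
    return dict(incidents)


def triage(host, alerts):
    """Return an incident summary with a recommended next step."""
    severity = max(a.model_score for a in alerts)
    criticality = ASSET_CRITICALITY.get(host, "unknown")
    if severity >= AUTO_EXECUTE_THRESHOLD and criticality == "low":
        action = "execute:isolate_host"      # safe to contain automatically
    elif severity >= 0.5:
        action = "recommend:analyst_review"  # a human makes the call
    else:
        action = "log_only"
    return {"host": host, "alerts": len(alerts), "severity": severity,
            "criticality": criticality, "action": action}


alerts = [
    Alert("laptop-17", "ransomware_behavior", 0.95),
    Alert("laptop-17", "shadow_copy_delete", 0.80),
    Alert("web-01", "ransomware_behavior", 0.95),
]
for host, group in cluster_by_host(alerts).items():
    print(triage(host, group))
```

Note the design choice: the same high score leads to automatic isolation on a low-criticality laptop but only a recommendation on a business-critical server.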

The results in mature implementations are significant:

- A fintech company deploying D3 Security's AI-driven SOAR reported a 10x improvement in mean time to response

- The same deployment automated 90% of Tier-1 SOC activity - enrichment, initial containment, and notification workflows

- One Elastic study reports that customers using AI-powered Attack Discovery cut investigation time by 34%

AI handles the mechanical work: threat intelligence lookups, user and device context, asset inventory checks, playbook triggers. You still own the high-stakes decisions - whether to isolate a business-critical server, how to communicate with stakeholders, when to escalate. Human intervention isn't removed from the equation.

Predictive Analysis and Proactive Defense

Traditional detection fires once something bad is already happening. Predictive systems aim to spot the precursors.

AI-powered threat intelligence platforms correlate data from multiple sources: vulnerability disclosures, exploit activity in the wild, dark web chatter, and your own environment's exposure.

What predictive systems actually do:

- Forecast which vulnerabilities are being actively targeted so you can prioritize patching

- Hunt for patterns aligned with MITRE ATT&CK techniques before detection rules fire

- Identify precursors like reconnaissance activity, misconfigurations, and exposed credentials

IBM's X-Force uses ML to help organizations prioritize vulnerabilities based on observed threat activity rather than CVSS scores alone. Managed XDR services like Rackspace's RAISE engine continuously hunt across customer environments and trigger proactive containment.

The predictions are probabilistic. AI can tell you a vulnerability in your environment is being actively exploited elsewhere. It can't guarantee you'll be targeted. You still need risk management processes to weigh recommendations against business context.
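
One way to picture prioritizing by threat activity and not by CVSS alone is a blended score. The sketch below is an assumption-laden illustration: the `exploit_probability` field stands in for whatever likelihood signal a threat-intelligence feed provides, the identifiers are placeholders, and the exposure weights are arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    vuln_id: str
    cvss: float                 # 0.0-10.0 base severity
    exploit_probability: float  # 0.0-1.0, e.g. from a threat-intel model
    internet_exposed: bool


def priority(v):
    """Blend severity with likelihood; the weights are illustrative assumptions."""
    exposure = 1.0 if v.internet_exposed else 0.5
    return (v.cvss / 10.0) * v.exploit_probability * exposure


vulns = [
    Vulnerability("VULN-A", 9.8, 0.02, False),  # critical score, little exploit activity
    Vulnerability("VULN-B", 7.5, 0.90, True),   # lower score, actively exploited
]
ranked = sorted(vulns, key=priority, reverse=True)
print([v.vuln_id for v in ranked])
```

Here the actively exploited, internet-facing issue outranks the higher-CVSS one. The output is still only a ranking; business context decides what actually gets patched first.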

Improved Accuracy and Reduced False Positives

Alert fatigue is one of the most documented problems in security operations.

The numbers tell the story:

- Organizations receive an average of 11,000 security alerts daily

- Up to 70% prove to be false positives in traditional setups

- Analysts spend roughly a quarter of their time chasing noise

Over time, this degrades vigilance. Real threats get missed because they're buried in low-value events. Analyst burnout becomes a retention problem, draining your human resources.

AI directly addresses this by learning to distinguish genuine threats from benign anomalies. Models trained on analyst feedback continuously refine their scoring - suppressing low-risk events and elevating high-confidence detections.
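
A feedback loop of this kind can be approximated with per-rule precision tracking. This sketch is a simplification under stated assumptions: the `FeedbackScorer` class, the rule names, and the 0.1 suppression threshold are hypothetical, and real systems learn from far richer features than a rule name.

```python
from collections import defaultdict


class FeedbackScorer:
    """Tracks analyst verdicts per detection rule and down-ranks noisy rules."""

    def __init__(self, suppress_below=0.1):
        self.suppress_below = suppress_below
        self.true_pos = defaultdict(int)
        self.false_pos = defaultdict(int)

    def record(self, rule, was_real_threat):
        """Store one analyst verdict for an alert raised by `rule`."""
        if was_real_threat:
            self.true_pos[rule] += 1
        else:
            self.false_pos[rule] += 1

    def precision(self, rule):
        """Laplace-smoothed share of this rule's alerts that were real."""
        tp, fp = self.true_pos[rule], self.false_pos[rule]
        return (tp + 1) / (tp + fp + 2)

    def should_suppress(self, rule):
        return self.precision(rule) < self.suppress_below


scorer = FeedbackScorer()
for _ in range(50):
    scorer.record("dns_burst", was_real_threat=False)  # analysts keep closing these

print(scorer.should_suppress("dns_burst"))
print(scorer.should_suppress("never_seen_rule"))
```

The smoothing means a rule with no feedback starts at a neutral 0.5 and is not suppressed until evidence accumulates. A cautious deployment would lower the priority of noisy alerts and keep them searchable, since a noisy rule can still fire on a real incident.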

The improvements in well-implemented deployments are real:

- A global financial services firm using Avatier's AI-driven identity security reported an 85% reduction in alert volume

- Time-to-resolution for genuine incidents improved by 4.5x

- Elastic reports defense customers reduced daily alerts from over 1,000 to approximately eight actionable discoveries

These numbers depend on data quality, integration depth, and SOC maturity. But the pattern is consistent: properly trained AI models reduce noise so you can focus on threats that actually require human judgment.

Challenges and Risks of AI in Cyber Security

AI introduces its own attack surface and failure modes. Understanding these risks matters as much as understanding the benefits.

Adversarial Attacks

Adversarial attacks aren't theoretical - they show up in real-world systems.

AI systems can be targeted directly through techniques designed to make models misbehave:

- Data poisoning - Inserting malicious records into training data to degrade performance or create backdoor triggers

- Model extraction - Querying a model repeatedly to reverse-engineer its decision logic or identify blind spots

- Prompt injection - Crafting inputs that bypass safety filters in LLM-based tools

For large language models integrated into security workflows, prompt injection is a significant concern. Research from Carnegie Mellon demonstrates that carefully crafted inputs can bypass safety filters across different model providers.

Many other methods exist, including Anchored Fictional Multilingual Injection (AFMI), described in a 2025 whitepaper of the same name.

When LLMs connect to tools with real-world effects, such as file access, code execution, and ticketing systems, successful prompt injection moves from bad text output to unauthorized actions.

Defenses exist but aren't perfect.

Adversarial training, input preprocessing, action restrictions, and strict validation all help. None provide complete protection against adaptive adversaries. They raise the bar and require continuous evaluation.
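
Action restrictions and strict validation can be as simple as checking every tool call an LLM proposes before anything runs. The sketch below assumes a hypothetical call format (`{"tool": ..., "args": {...}}`) and a made-up allowlist; it does not reflect any specific agent framework.

```python
import ipaddress

# Hypothetical allowlist: tool name -> whether a human must approve it.
ALLOWED_TOOLS = {"lookup_ip": False, "create_ticket": False, "isolate_host": True}


def validate_tool_call(call):
    """Return (decision, reason) for a tool call proposed by an LLM."""
    name = call.get("tool")
    args = call.get("args", {})
    if name not in ALLOWED_TOOLS:
        return "deny", f"tool {name!r} is not on the allowlist"
    if name == "lookup_ip":
        try:
            # Strict parsing rejects anything that is not exactly an IP address.
            ipaddress.ip_address(str(args.get("ip", "")))
        except ValueError:
            return "deny", "argument is not a valid IP address"
    if ALLOWED_TOOLS[name]:
        return "needs_approval", "high-impact action requires a human"
    return "allow", "ok"


print(validate_tool_call({"tool": "run_shell", "args": {"cmd": "id"}}))
print(validate_tool_call({"tool": "lookup_ip", "args": {"ip": "203.0.113.7; cat /etc/passwd"}}))
print(validate_tool_call({"tool": "isolate_host", "args": {"host": "web-01"}}))
```

The point of validating outside the model is that an injected prompt can change what the LLM asks for, but not what the surrounding code is willing to execute.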

Dependency and Overreliance on AI

The ISA Global Cybersecurity Alliance explicitly warns about overreliance on automation:

- False sense of security

- Missed zero-day threats

- Erosion of human expertise

- Failure when automated tools are bypassed

The Equifax breach illustrates the danger. Equifax failed to patch a known Apache Struts vulnerability despite having automated scanning in place. The breakdown wasn't a lack of tools - it was overreliance on those tools without sufficient human oversight.

Research shows operators tend to accept automated recommendations even when they conflict with other evidence. Heavy reliance on AI can degrade the critical thinking skills needed to override systems when they're wrong.

The World Economic Forum flags a systemic concern: widespread reliance on a small set of AI models or cloud providers could create correlated vulnerabilities across critical infrastructure.

The answer isn't avoiding AI. It's maintaining meaningful human oversight.

The EU AI Act requires that high-risk AI systems be designed so humans can understand the system's role, interpret outputs, and override when necessary. In your SOC, that means treating AI outputs as recommendations, not verdicts - and maintaining the skills to question them.

Ethical and Privacy Considerations

AI systems inherit biases from their training data.

In security contexts - fraud detection, access controls, insider threat scoring - this can mean higher false positive rates for particular demographic groups. Independent testing of UK police facial recognition found significantly higher error rates for certain populations. Similar risks apply to behavioral analytics and risk-scoring tools.
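
A basic way to check for this kind of disparity is to compare false positive rates across groups on labelled outcomes. The sketch below uses synthetic records and hypothetical group labels; which groupings are appropriate and lawful to measure is a separate question for your privacy and legal teams.

```python
from collections import defaultdict


def false_positive_rates(records):
    """records: iterable of (group, flagged, actually_malicious) tuples.

    Returns the share of benign cases that were flagged, per group.
    """
    flagged_benign = defaultdict(int)
    total_benign = defaultdict(int)
    for group, flagged, malicious in records:
        if not malicious:
            total_benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {g: flagged_benign[g] / total_benign[g] for g in total_benign}


# Synthetic data: 100 benign cases per group, flagged at different rates.
records = [("A", i < 5, False) for i in range(100)]
records += [("B", i < 15, False) for i in range(100)]

print(false_positive_rates(records))
```

A gap like the one in this synthetic data (group B flagged three times as often as group A) would be a signal to review training data and features before trusting the scores.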

Opacity creates accountability challenges. When an AI system blocks a login or flags a user, explaining why can be difficult if the model is a black box.

Privacy concerns extend beyond bias. AI-driven security tools work best with extensive data: logs, network traffic, behavioral telemetry. The line between security monitoring and surveillance can blur if data collection isn't tightly scoped.

If you use AI-driven security tools, you need to be transparent about what data they collect and why.

Generative AI in security workflows adds exposure. Samsung banned employees from using public AI chatbots after staff submitted sensitive source code. In 2025, researchers disclosed that DeepSeek left a database exposed containing user chat histories and API keys.

Privacy-by-design principles apply: data minimization, local models where appropriate, strict access controls, and clear policies on third-party AI services.
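
Data minimization can start with redacting obvious identifiers and secrets before text leaves your environment. The patterns below are illustrative assumptions only; they will miss many formats (IPv6, vendor-specific key formats, names) and are no substitute for a tested data loss prevention control.

```python
import re

# Illustrative patterns only; real deployments need broader, tested coverage.
PATTERNS = [
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IP]"),
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"(?i)\b(api[_-]?key|token|password)\s*[=:]\s*\S+"), r"\1=[REDACTED]"),
]


def minimize(text):
    """Replace IPs, email addresses, and key=value secrets with placeholders."""
    for pattern, replacement in PATTERNS:
        text = pattern.sub(replacement, text)
    return text


log_line = "login failed for bob@example.com from 10.1.2.3 api_key=abc123"
print(minimize(log_line))
```

Running this kind of filter in front of any third-party AI service keeps the useful shape of a log line while withholding the values that matter to an attacker.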

Will AI Replace Cyber Security Experts?

Given everything above, a natural question follows: where does this leave security professionals?

Short answer: AI won't replace you. The evidence points toward augmentation.

The global cyber security workforce gap stands at roughly 3.5 million unfilled positions. That shortage persists even as AI adoption accelerates.

A World Economic Forum article summarizing Fortinet research found that 87% of cyber security professionals expect AI to enhance their roles. Only 2% believe AI will fully replace them.

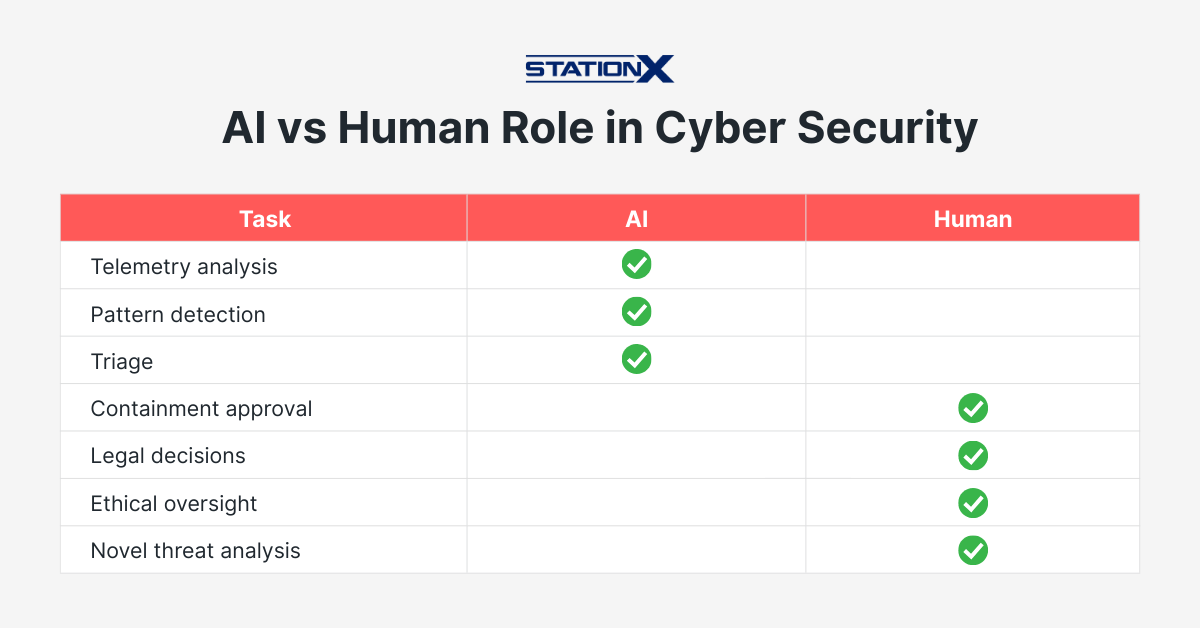

AI handles specific categories of work well: high-volume telemetry analysis, pattern recognition, alert triage, routine playbook execution. These tasks consume analyst time but don't require creative judgment.

Humans remain essential for novel cyber attacks that don't match training data, adversarial scenarios where attackers target AI weaknesses, and decisions with legal or business implications. "The AI said so" isn't an acceptable explanation when leadership asks why a particular call was made.

The roles are evolving. Traditional Tier-1 SOC work - monitoring alerts, initial triage - is increasingly automated. Analysts who advance will need skills in AI governance, model tuning, and adversarial testing.

The future belongs to human-AI collaboration. You'll work alongside these systems, not be replaced by them.

Future Trends of AI in Cyber Security

Several developments are shaping where AI in security is heading:

- Autonomous agents - Systems combining LLMs with tool access to perform multi-step workflows: investigating alerts, gathering context, executing containment, generating reports

- Maturing governance - The EU AI Act, NIST AI RMF, and ISO/IEC 42001 are pushing organizations toward disciplined AI deployment

- Adversarial adoption - State actors are using generative AI for spear-phishing, and some malware now calls LLMs during execution to generate and obfuscate code dynamically

Microsoft and OpenAI reported that threat actors from multiple countries have begun using AI to craft phishing content and research vulnerabilities. Google's Threat Intelligence Group documented malware families incorporating LLMs into their execution chains.

The assumption going forward: AI on both sides. Defenders using it to detect and respond. Attackers using it to evade and accelerate.

Partnering with AI, not resisting it, will shape the next generation of cyber defense.

Conclusion

AI in security operations is no longer optional for organizations facing modern threat volumes. The technology provides measurable improvements in detection accuracy, response speed, and analyst efficiency when properly implemented.

The benefits are real but conditional. AI performs best with high-quality data, mature processes, and humans who understand both its capabilities and limitations.

For security professionals, the implications are clear: AI skills are becoming a baseline expectation. Understanding how to work with these tools, evaluate their outputs, and recognize their failure modes will separate those who advance from those who don't.

Those who learn to work with AI, not against it, will define the next generation of cyber defense.

To get a head start in cyber security, consider the StationX Masters Program, offering over 30,000 courses and labs, a personalized certification roadmap, career counseling, 1:1 mentorship, and much more.

Or, master cyber security from beginner to advanced with our Complete Cyber Security Course Bundle covering network security, ethical hacking, endpoint protection, and online anonymity.

The Complete Cyber Security Course Bundle includes:

- The Complete Cyber Security Course! Volume 1: Hackers Exposed

- The Complete Cyber Security Course! Volume 2: Network Security

- The Complete Cyber Security Course! Volume 3: Anonymous Browsing

- The Complete Cyber Security Course! Volume 4: End Point Protection

- The Complete Cyber Security Course Practice Test - Volume 1