In an era where the internet shapes our daily lives, from social connections to political discourse, governments worldwide are grappling with how to regulate the digital Wild West. The United Kingdom's Online Safety Act 2023 stands as one of the most ambitious attempts to do just that.

Billed as a groundbreaking piece of legislation to protect users—especially children—from online harms, the UK Online Safety Act has sparked fierce debate since receiving Royal Assent on October 26, 2023. Now, with its phased implementation well underway, critics argue it's not just ineffective but actively harmful to privacy, free speech, and cybersecurity.

As the UK heads toward potential political shifts, questions loom: Is this Act a shield for the vulnerable, or a tool for overreach?

The Online Safety Act, often abbreviated as OSA, emerged from years of public outcry over issues like cyberbullying, child exploitation, and the spread of misinformation. Drafted under the previous Conservative government, it imposes duties on online platforms—including social media giants like Facebook, X (formerly Twitter), and TikTok—to proactively identify and mitigate risks.

Illegal content, such as child sexual abuse material or terrorist propaganda, must be swiftly removed, while content that is legal but harmful to children (e.g., material promoting self-harm or suicide) requires age-appropriate safeguards. Ofcom, the UK's communications regulator, enforces compliance through codes of practice, with penalties reaching £18 million or 10% of a company's global revenue, whichever is greater.

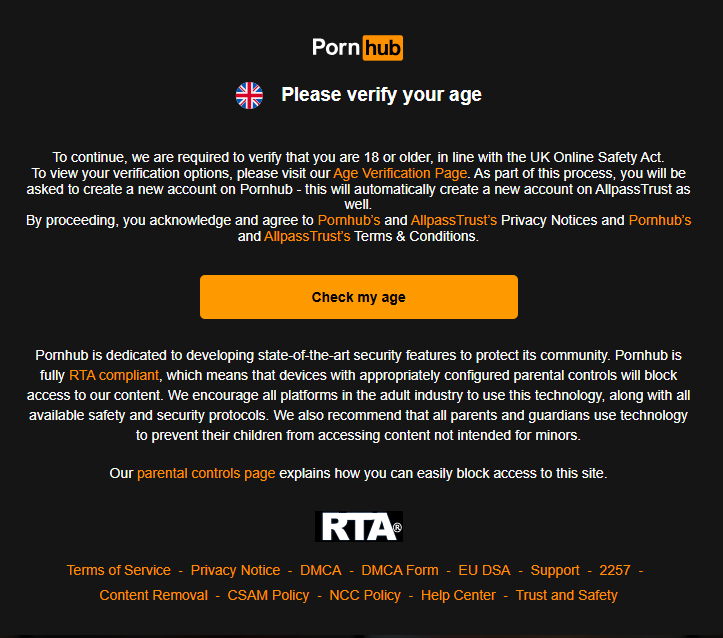

Implementation has been staggered to give platforms time to adapt. Initial duties kicked in on January 10, 2024, focusing on child safety assessments. By March 17, 2025, platforms faced enforceable rules on illegal content, and as of July 25, 2025, "highly effective" age assurance measures, such as facial age estimation or photo-ID verification, became mandatory for sites hosting pornographic or otherwise harmful material.

Full enforcement is slated for 2026, with Ofcom issuing guidance on everything from content moderation to transparency reports. Recent government statements, including the Final Statement of Strategic Priorities released on July 2, 2025, emphasize tackling terrorism and foreign interference, aligning the Act with national security concerns.

Supporters hail the OSA as a long-overdue step toward a safer digital space. The government claims it will make the UK "the safest place in the world to be online," pointing to provisions that require platforms to conduct risk assessments and implement "safety by design" principles.

During the 2024 UK riots, for instance, proponents argued that the Act, once fully in force, could have helped curb the inflammatory content that fueled the unrest. London Mayor Sadiq Khan, although he called the Act "not fit for purpose" in that context, urged a rapid review to strengthen it, underscoring the law's intended focus on real-world harms.

Yet, beneath this veneer of protection lies a chorus of damning criticisms from cybersecurity experts, civil liberties groups, and even political figures. Organizations like the Electronic Frontier Foundation (EFF) and researchers from University College London (UCL) have labeled the Act a "privacy disaster."

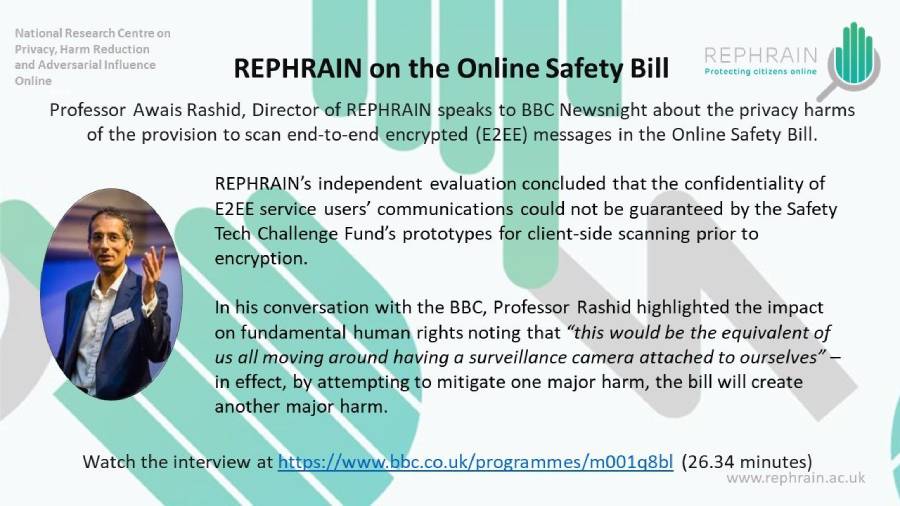

At its core, the law empowers Ofcom to require platforms to use "accredited technology" to detect illegal content such as child sexual abuse material, including in private messages. Experts say this is incompatible with end-to-end encryption, a cornerstone of secure communication in apps like WhatsApp and Signal. Professor Steven Murdoch of UCL has warned that client-side scanning could create "routine monitoring" of intimate conversations, effectively installing a "mandatory wiretap" on users' devices.

This erosion of encryption doesn't just threaten privacy; it actively worsens online security. By pushing platforms to collect and retain large amounts of sensitive user data, such as age verification records and content logs, the Act creates "honey pots" attractive to hackers.

Professor Awais Rashid, director of the REPHRAIN research center, has highlighted the absence of robust safeguards, noting that such data hoarding increases vulnerability to breaches without guaranteeing safer outcomes.

Critics point out that AI-driven moderation tools, central to compliance, are prone to errors, leading to overblocking of legitimate content. Vulnerable groups, including LGBTQ+ communities discussing health issues, risk being silenced, fostering a "chilling effect" on free expression.

Moreover, the Act's vague definitions of "harmful" content open the door to government overreach. Ofcom's powers, influenced by ministerial directives on national security, could evolve into broader surveillance, setting a precedent for authoritarian regimes globally.

The Internet Society and Open Rights Group have decried this as "regulatory capture," where Big Tech influences policy while users bear the brunt. Recent analyses, such as FTI Consulting's June 2025 report, stress the impossible balance between "safety by design" and "privacy by design," arguing that the Act prioritizes the former at the expense of the latter.

Repeal efforts are gaining momentum amid these concerns. A parliamentary petition launched in 2025, calling for the Act's outright repeal, has amassed over 350,000 signatures as of July 28, 2025. Petitioners argue the law is "borderline dystopian," enabling unchecked state intrusion.

The UK government issued its formal response that same day, affirming it has no plans to repeal the Act and emphasizing ongoing collaboration with Ofcom on effective implementation. However, political challengers are seizing on the issue: Reform UK, in a July 28, 2025, announcement, vowed to scrap the Act entirely if elected, branding it a threat to freedoms. This echoes broader discontent, with figures like Elon Musk criticizing similar regulations as censorship tools.

As the Act's full rollout approaches, its real-world impact remains under scrutiny. While it may deter some blatant harms, evidence from early phases—such as platforms struggling with compliance costs and algorithmic flaws—suggests it's falling short. A November 2024 report from CARE questioned whether the regulations suffice for deep-rooted issues like online child exploitation, while a September 2024 analysis by Global Partners Digital deemed it inadequate during crises like the riots.

In conclusion, the Online Safety Act 2023, despite its noble aims, represents a flawed overreach that sacrifices privacy and security on the altar of control. It doesn't make the internet safer; it makes it more vulnerable to surveillance and censorship. As voters, we have the power to demand better. In the next election, vote against parties that support this invasive law—back those who promise repeal.

Sign the petition at https://petition.parliament.uk/petitions/722903 and make your voice heard. Your digital freedom depends on it.